Linear Space Lighting in 5 Minutes

Our eyes see things by receiving light emitted by, or bounced off, them. Monitors fool our eyes into seeing something by emitting the same amount of light using LEDs or an LCD with a backlight. In computer graphics we aim to construct realistic image data and transform it so that it correctly reaches the user’s eye.

For example, in this photo of an apple, conceptually we used a camera to capture the intensity values of the scene, saved them to storage, and displayed the same or similar intensity values on the screen to recreate the scene that was captured. This makes the display look like the scene of an apple!

In a simplified world, we’d simply read these values, save them to disk, throw them on the monitor, and call it a day. Of course, in practice this runs into many problems. For example, if we simply read these values and stored them on disk in 8 bits, we would run into bit depth issues:

The above image shows the banding that results from storing naive values and displaying them. Furthermore, in practice no device has a perfect 1:1 response from input to output: the camera sensor can affect this, analogue photo development can affect this, the digital bit depth can affect this as mentioned above, and the monitor or display device itself can affect this. We call this property linearity; a camera response is more linear the closer it transfers to 1:1, as in 40 nits in the scene = 40 nits in the captured image value, 50 nits in the scene = 50 nits in the captured image value.

Gamma Space

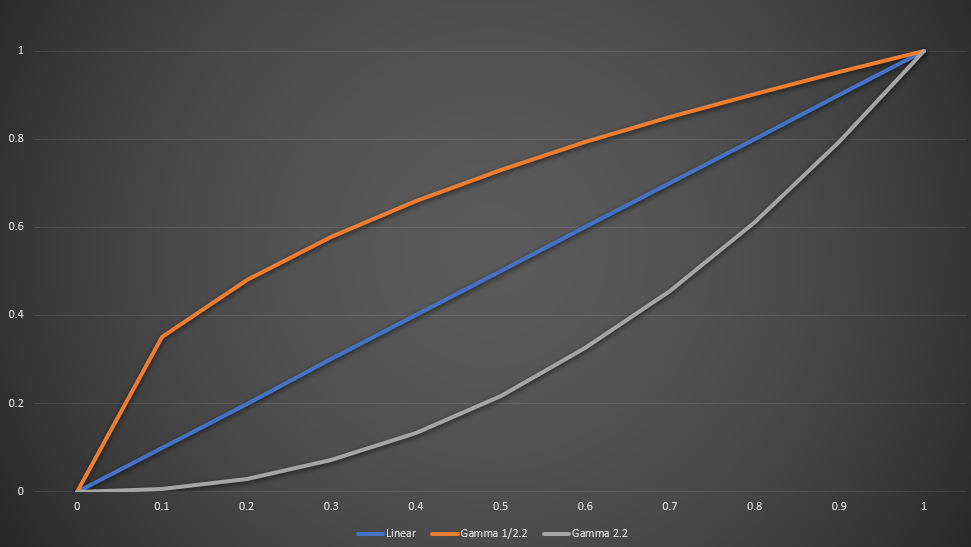

As in the bit depth case, we sometimes introduce intentional non-linearity to make better use of the bits available per pixel. Most monitors simply assume the input coming from the cable has a pre-applied gamma of about 1/2.2, and go ahead and apply a gamma of 2.2 to it. This means that if you wrote a program to simply output intensity values to the screen in 0 - 255 linearly, you wouldn’t get linear intensity out the other side of the monitor. Most 8-bit image formats store the intensity values with a gamma value of around 1/2.2 to 1/2.4.
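To make the "better use of bits" point concrete, here is a minimal sketch that assumes an idealised pure power-law gamma of 2.2 (real displays and the sRGB curve differ slightly). The function names are made up for illustration. It counts how many of the 256 available codes land in the darkest 10% of linear intensity, with and without gamma encoding.

```python
GAMMA = 2.2  # assumed idealised display gamma, not the exact sRGB curve


def encode_gamma(linear, gamma=GAMMA):
    """Encode a linear intensity in [0, 1] with a 1/gamma power curve."""
    return linear ** (1.0 / gamma)


def decode_gamma(encoded, gamma=GAMMA):
    """What an idealised gamma-2.2 monitor does to the incoming signal."""
    return encoded ** gamma


def count_codes_below(threshold, decode):
    """Count 8-bit codes whose decoded linear intensity is below threshold."""
    return sum(1 for code in range(256) if decode(code / 255.0) < threshold)


# Codes available for the darkest 10% of linear intensity.
dark_codes_linear = count_codes_below(0.1, lambda v: v)
dark_codes_gamma = count_codes_below(0.1, decode_gamma)
print(dark_codes_linear, dark_codes_gamma)

# Writing code 128 straight to the screen gives roughly 22% of peak light,
# not 50%, because the monitor applies its gamma to the signal.
print(decode_gamma(128 / 255.0))
```

With these assumptions the gamma-encoded signal spends more than three times as many codes on the dark range, which is where banding tends to be most visible.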

The above calibration image, when viewed on a computer monitor, should reveal your monitor’s gamma. On a correctly sRGB-calibrated monitor you should see the middle row of squares matching the surrounding cross-hatch pattern.

Linear Space

In the real world, lighting happens in linear space. If you shone a flashlight at a spot and measured the intensity with a spot meter to be 300 nits, and then shone a second, identical flashlight at the same spot, you would now measure an intensity of 600 nits.

In computer graphics and game engines, we often aim to do all our lighting calculations in linear space, to be as physically correct as possible. This actually presents a bigger challenge than one would first expect!
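As a small worked example of why the space matters, the sketch below adds two identical lights, once in linear space and once by naively summing gamma-encoded values. It assumes a pure 2.2 power curve, and the function names are hypothetical.

```python
GAMMA = 2.2  # assumed idealised power-law gamma


def encode(linear):
    """Linear intensity to gamma-encoded signal."""
    return linear ** (1.0 / GAMMA)


def decode(encoded):
    """Gamma-encoded signal back to linear intensity (what the display emits)."""
    return encoded ** GAMMA


def add_lights_linear(a, b):
    """Physically motivated: light intensities simply add."""
    return a + b


def add_lights_gamma_naive(a, b):
    """Wrong: sums the encoded values, then lets the display decode the sum."""
    return decode(encode(a) + encode(b))


correct = add_lights_linear(0.2, 0.2)      # 0.4, double one light
naive = add_lights_gamma_naive(0.2, 0.2)   # 2**2.2 (about 4.6) times one light
print(correct, naive)
```

Under this assumption, the naive sum comes out about 4.6 times as bright as a single light instead of twice as bright, which is the kind of over-bright result gamma-space lighting produces.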

Firstly, all the 8-bit images are stored in inverse gamma space, already corrected in anticipation of the monitor. This means that when reading one as a texture, we need to linearise it before doing any calculations. We could apply the transformation after sampling, but then linear interpolation and mipmap blending would still happen in gamma space, which is wrong. To solve this, we can use sRGB format textures, which pretty much every GPU supports today and which let the GPU convert texels to linear as part of sampling, ideally before filtering. https://www.khronos.org/registry/OpenGL/extensions/EXT/EXT_texture_sRGB.txt
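The filtering problem can be illustrated on the CPU. The sketch below uses the standard piecewise sRGB transfer functions and blends a black texel with a white texel 50/50, once on the stored values and once after linearising. The helper names are made up for this example; a GPU with sRGB textures does the equivalent in hardware.

```python
def srgb_to_linear(c):
    """Decode one sRGB-encoded channel value in [0, 1] to linear."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(l):
    """Encode one linear channel value in [0, 1] to sRGB."""
    if l <= 0.0031308:
        return 12.92 * l
    return 1.055 * l ** (1.0 / 2.4) - 0.055


black, white = 0.0, 1.0  # stored (sRGB-encoded) texel values

# Filtering the stored values directly, then linearising the result.
filtered_in_gamma = srgb_to_linear((black + white) / 2.0)

# Linearising each texel first, then filtering.
filtered_in_linear = (srgb_to_linear(black) + srgb_to_linear(white)) / 2.0

print(filtered_in_gamma)   # about 0.214: too dark
print(filtered_in_linear)  # 0.5: half the light of a white texel
```

The same mismatch shows up in every bilinear tap and every mip level, which is why linearising only after sampling is not enough.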

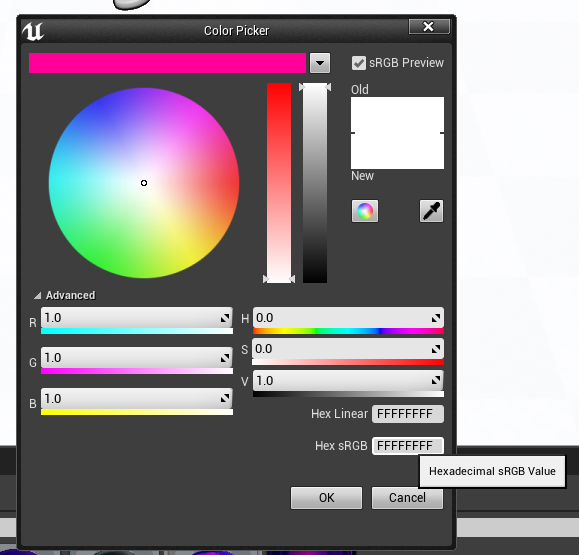

Secondly, most hex colour values used by programs are stored in inverse gamma space, the same as textures. This means that if we have an engine feature that takes a hex value as input for, say, a point light color, we need to linearise this value before doing the point light calculations. In fact, Unreal Engine gives two options, one for a linear space hex value and one for a gamma space one.
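A minimal sketch of that linearisation step, assuming the hex value is a six-digit sRGB-encoded colour such as `#808080`. The function `hex_to_linear_rgb` is a hypothetical helper, not an engine API.

```python
def srgb_to_linear(c):
    """Decode one sRGB-encoded channel value in [0, 1] to linear."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def hex_to_linear_rgb(hex_colour):
    """Convert '#RRGGBB' (assumed sRGB-encoded) to a linear (r, g, b) tuple."""
    digits = hex_colour.lstrip("#")
    if len(digits) != 6:
        raise ValueError("expected a colour of the form #RRGGBB")
    # Split into three 8-bit channels and normalise to [0, 1].
    channels = [int(digits[i:i + 2], 16) / 255.0 for i in (0, 2, 4)]
    return tuple(srgb_to_linear(c) for c in channels)


# Mid-grey in hex is only about 22% of full intensity once linearised.
light_colour = hex_to_linear_rgb("#808080")
print(light_colour)
```

The linear tuple is what would be multiplied into the lighting calculation; the raw hex channels would make the light noticeably too bright.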

HDR Monitors

While most LDR monitors simply apply an sRGB gamma (about 2.2) to the input signal, for HDR it gets a bit more complicated. There are a few standards; the big ones include BT.2020 and Hybrid Log-Gamma. Most high-end TVs support both!

The BT.2020 spec defines a nonlinear transfer function for gamma correction that is very similar to the sRGB one used in LDR monitors. For more information see https://en.wikipedia.org/wiki/Rec._2020 under “Transfer characteristics”.
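For reference, the encoding direction of that transfer function can be sketched as below. The constants are the higher-precision values usually quoted for Rec. 2020; the function name is made up, and the input is assumed to be a linear value normalised to [0, 1].

```python
ALPHA = 1.09929682680944   # commonly quoted Rec. 2020 constant
BETA = 0.018053968510807   # commonly quoted Rec. 2020 constant


def bt2020_oetf(linear):
    """Encode a linear value in [0, 1] with the Rec. 2020 transfer function."""
    if linear < BETA:
        # Short linear segment near black.
        return 4.5 * linear
    # Power-law segment with exponent 0.45 for the rest of the range.
    return ALPHA * linear ** 0.45 - (ALPHA - 1.0)


for value in (0.0, 0.01, 0.18, 1.0):
    print(value, bt2020_oetf(value))
```

Like sRGB, it has a linear toe followed by a power curve, which is where the similarity comes from.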

The HLG (Hybrid Log-Gamma) spec comes from BBC television, who wanted an HDR standard that looks somewhat OK if simply re-interpreted as LDR sRGB on incompatible devices, but gives full-definition HDR on newer display devices. Game engines can generally detect whether the attached monitor supports either standard and act accordingly, so this property of HLG isn’t so useful in this context, but it has become an HDR standard nonetheless.
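The "hybrid" in the name refers to the shape of the encoding curve: a square-root (gamma-like) segment for darker values and a logarithmic segment for brighter ones. A minimal sketch of the HLG encoding function, using the constants published in ITU-R BT.2100 and assuming scene-linear input normalised to [0, 1] (the function name is illustrative):

```python
import math

# Constants as published for the HLG OETF in ITU-R BT.2100.
A = 0.17883277
B = 0.28466892
C = 0.55991073


def hlg_oetf(linear):
    """Encode a scene-linear value in [0, 1] to an HLG signal in [0, 1]."""
    if linear <= 1.0 / 12.0:
        # Gamma-like lower part, close to what an SDR display expects.
        return math.sqrt(3.0 * linear)
    # Logarithmic upper part that carries the highlights.
    return A * math.log(12.0 * linear - B) + C


for value in (0.0, 1.0 / 12.0, 0.5, 1.0):
    print(value, hlg_oetf(value))
```

The square-root lower half is what makes the signal look passable on a display that treats it as ordinary gamma-encoded content.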

Note that the TV applies the transfer functions to the HDMI signal we give it, so we want to apply them in reverse in anticipation of that.

Colour Checker Calibration

Always check linearity to make sure the space in which any lighting is calculated is linear. Check any reference captures and HDR skybox panorama captures, and their subsequent processing and stitching, for linearity issues. Make sure all alpha blending, decal blending and texture filtering are done in linear space.

In order to check a capture process, we can use a colour checker - the grey squares of a colour checker should increase linearly.
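One way to turn this check into a number, sketched under the assumption that "increase linearly" means the captured grey values are proportional to the patches' known reflectances: fit a straight line in log-log space and look at the slope. A slope near 1 suggests a linear capture, while a slope near 1/2.2 suggests gamma-encoded data. The reflectance values below are illustrative placeholders, not data-sheet values, and `loglog_slope` is a hypothetical helper.

```python
import math


def loglog_slope(reference, measured):
    """Least-squares slope of log(measured) against log(reference)."""
    xs = [math.log(r) for r in reference]
    ys = [math.log(m) for m in measured]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    return covariance / variance


# Placeholder reflectances for six grey patches; substitute the values
# supplied with your own chart.
grey_reflectance = [0.03, 0.09, 0.19, 0.36, 0.59, 0.90]

# Simulated captures: one linear (with an exposure gain), one gamma-encoded.
linear_capture = [2.0 * r for r in grey_reflectance]
gamma_capture = [r ** (1.0 / 2.2) for r in grey_reflectance]

print(loglog_slope(grey_reflectance, linear_capture))
print(loglog_slope(grey_reflectance, gamma_capture))
```

Working in log space means an overall exposure gain only shifts the line and does not change the slope, so the check does not depend on matching absolute brightness.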

Once we are sure the capture process is linear and calibrated, we can simply point a camera at the monitor displaying a gradient (or virtual colour checker board) to check end-to-end. It wouldn’t be a bad idea to also put a virtual colour checker as an in-game asset, to check linearity (or non-linearity) of in-game buffers.